Abstract

This paper focuses on improving rainfall-runoff forecasts by a combination of genetic programming (GP) and basic hydrological modelling concepts. GP is a general optimisation technique for making an automated search of a computer program that solves some particular problem. The SORD! program was developed for the purposes of this study (in the R programming language). It is an implementation of canonical GP. Special functions are used for a combined approach of hydrological concepts and GP. The special functions are a reservoir model, a simple moving average model, and a cumulative sum and delay operator. The efficiency of the approach presented here is tested on runoff predictions for five catchments of various sizes. The input data consists of daily rainfall and runoff series. The forecast step is one day. The performance of the proposed approach is compared with the results of the artificial neural network model (ANN) and with the GP model without special functions. GP combined with these concepts provides satisfactory performance, and the simulations seem to be more accurate than the results of ANN and GP without these functions. An additional advantage of the proposed approach is that it is not necessary to determine the input lag, and there is better convergence. The SORD! program provides an easy-to-use alternative for data-oriented modelling combined with simple concepts used in hydrological modelling.

Similar content being viewed by others

1 Introduction

Genetic programming (GP) is a potential evolutionary machine learning technique that automatically solves problems without requiring the user to know or specify the form or structure of the solution in advance [1]. Since the introduction of GP by Koza [2] it has been applied in many fields of science and also in real world problems [1].

GP is also becoming popular in the hydrological sciences. The main uses of GP in hydrology are for modelling various processes, e.g. rainfall, runoff, evapotranspiration, hydropedology, oceanology, etc.

One of the most important tasks of hydrological modelling is in predicting floods and runoff from catchments in general. This is quite a difficult rainfall-runoff process modelling task, due to the highly nonlinear, time- and spatially-varying nature of the process. In addition, it is demanding and expensive to measure the processes that affect runoff, which means that good quality data is scarce. The data is often noisy, badly spatially distributed or completely missing. In addition, there is often a very urgent need for good modelling results.

Thus it is more satisfactory to use data-driven models than expensive physical models (in terms of both data availability and computation time), despite the disadvantages of data-driven models. In addition, conceptual models are still more expensive to use in bad conditions than lightweight data-driven models. In the context of hydrological modelling GP can be classified as a data-driven modelling technique. GP has recently begun to be used for modelling rainfall-runoff processes. Successful applications and a brief history of the use of GP in rainfall-runoff modelling will be presented in Sect. 3.

The main objective of this study is to improve the efficiency of runoff forecasting with the use of GP. An investigation is made of the design and use of canonical GP in combination with simple hydrological concepts in rainfall-runoff modelling tasks. This approach combines the advantages of a data-driven GP model with the efficiency of conceptual models and improves the effectiveness of structure and input optimising modelling technique.

The rainfall-runoff modelling efficiency of the proposed approach is tested on five catchments in the USA. This is a preliminary study for further application of this approach on some Czech catchments where it is difficult to forecast the runoff, and where data-driven models (especially GP-type models) are rarely applied. Improved forecast efficiency is needed on some Czech catchments in order to provide improved flood protection.

The paper is organised as follows: Sects. 2 and 3 provide a brief description of GP and its applications in rainfall-runoff modelling. Section 4 presents the catchments and datasets used in the tests. The methodology is described in Sect. 5. The development of the model is presented in 5.1, and the settings of the models are presented in 5.2. In Sect. 6, the results are presented and analysed. The paper closes with some conclusions.

2 Genetic programming

GP is one of the stochastic search procedures known as evolutionary algorithms (EA) belonging to the field of evolutionary computing. EAs are traditionally represented by evolutionary programming, genetic algorithms (GA), evolutionary strategies and genetic programming [3]. The common feature of EA is the application of Darwinian principles to automatic problem solving.

GP, the youngest method of EA, is derived from GA. The main differences between GP and GA are in the representation of individuals (GA—string of numbers, GP—computer program or equation) and in the subject that is optimised (GA optimises the parameters of the objective function, GP optimises both the parameters and the structure of the objective function). GP is considered as a generalisation of GA [4].

GP is a general optimisation method. The main objective of GP is to discover the relations between the input and output data. This is achieved by a simulated evolution process which leads to an executable computer program containing both the values (inputs and constants) and the functions. Accordingly to [5], GP is about breeding the computer program. In the symbolic regression field (to which rainfall-runoff modelling belongs), it is more precise to refer to GP individuals as functions or equations, rather than as computer programs.

In GP, the population of individuals (syntax trees in canonical GP) is progressively improved from generation to generation by selection and variation of the best individuals, whose offspring proceed to the next generation (often together with the best parents).

The first generation is created randomly from a specified number of individuals of a certain size. The individuals are syntax trees assembled by terminals (constants and inputs) and functions. The individuals are evaluated by a fitness function, which represents the efficiency with which the individual solves a given problem. After an evaluation, the selection of parents is processed (commonly some type of tournament). Then comes the crossover and mutation (common GP variation operators) of the selected parents, and offspring are created. The new generation is filled with offspring, and sometimes also with best parents (replication and elitism), and the evolution cycle is allowed to continue until the stopping criterion (time, fitness value, number of generations) is reached.

3 GP in rainfall-runoff modelling

Early evidence of the application of GP in rainfall-runoff modelling can be found in [6]. Further pioneering works are [7–9]. In these studies, synthetic rainfall-runoff series are used for modelling.

One of the first studies on application of GP with real data sets is [10], in which daily forecast of runoff was conducted for the Lindenborg catchment, Denmark (130.2 km\(^{2}\)). Results of GP modelling were also compared with those from an ANN model (and also with naive model and NAM model). It was concluded that none of the two data-driven techniques performed significantly better than the other.

The comparison between ANN and canonical GP techniques applied for real runoff data modelling has been made in [11]. In this work GP was applied for flow prediction for the Kirkton catchment in Scotland (6.85 km\(^{2}\)). The input variables were rainfall, streamflow and Penman open water evaporation. The results of ANN and GP (data-driven techniques) were compared with those from a calibrated conceptual model (HYRROM). The results of GP and ANN were again similar and show a very good agreement with the results of the conceptual model.

A combination of GP and conceptual models in the sense of using GP for calibration of a conceptual hydrological model (optimisation of model parameters) and correction of its systematical errors is presented in [12]. Multi-step runoff prediction on series with a short time step and with a detailed analysis of the derived models is presented in [13]. Studies on testing GP for a one-day runoff forecast are [14, 15]. A combined GP and ANN approach to rainfall-runoff modelling on an urbanized catchment is applied in [16].

The comparison of ANN and GP for the catchment of Choke Canyon reservoir (cca 15,000 km\(^{2}\)) in south Texas presented [17]. The forecast steps were 3, 7 and 30 days. In this study 15 scenarios were formed for the modelling analysis. Up to 20 different hydrometeorological characteristics were used as inputs. The ANN derived models were generally worse than the GP derived models.

The Indian catchment Narmada (98,796 km\(^{2}\)) was used for runoff modelling in [18]. Two modelling scenarios were performed—first for 1-day forecast of runoff (input data were runoff in daily step) for monsoon season and second scenario for non-monsoon season. The results of GP were compared with ANN. Both modelling techniques forecast streamflow with reasonable accuracy but GP performed better than ANN.

Forecast of monthly discharge series was performed in [19] by using several artificial intelligence methods including ANN and GP. Two river sites in south China were used—Manwan Hydropower in Mekong River with catchment area 114,500 km\(^{2}\) and Hongjiadu Hydropower on Wujiang River with catchment area 9,900 km\(^{2}\). In the site of Manwan dam, the performance of ANN and GP was similar, in the site of Hongjiadu dam, the GP performed better.

In study [20] a GP forecasting system that takes the input values from ground stations and also from radar were presented.

The study [21] was carried out in the West Malian River catchment, which is located in northeast China. The annual streamflow values and climatic data (precipitation and evapotranspiration) were used as inputs. Results showed that the performance of GP method was generally better than other methods including ANN.

Weekly forecast of streamflow by GP using multi-site rainfall presented [22]. A comparative studies of some data-driven models and some experimental investigations are [23–25].

Data as inputs [20] introduces a GP forecasting system that takes the input values from ground stations and also from radar. Predictions over longer time periods are studied in [21] (annual runoff), [22] (weekly runoff), [19] (monthly runoff). A comparative study of some data-driven models and some experimental investigations is [19] as well as [23–25].

The most recent works are [26], where a synthesis of wavelet-based multi-resolution decomposition and genetic programming are proposed and are used for forecasting monthly stream flow, and [27], where GP is used to predict water flow and transport of solids.

Earlier literature reviews mention studies that used canonical GP. In a broader sense of GP methods, we can note [28], which used grammatically-based GP, [29], which used gene expression programming (GEP) and [30] which applied linear GP (LGP). A comparison of the performance of GEP and ANN (input determination by GA) in estimating daily flows is presented in [31]. Among recent works, flood discharges have been estimated using GEP and LGP on ungauged stream flows in Turkey [32].

To the best of the authors’ knowledge there is no work dealing with more specific hydrological concepts as functions and with automatic input lag determination. Lag is often determined by means of statistical methods (cross correlation e.g. [19, 20, 31, 33], mutual information e.g. [23, 24]) or as a static set [12, 14, 28, 29]. The function as a moving average is used in a small number of studies (but acting as terminals with a predefined time window), e.g. [28].

4 Datasets

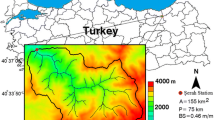

The efficiency of the GPF model was tested on data for five catchments. The catchments were chosen with respect to the catchment area (from cca 100–10,000 km\({^2}\)) from among US catchments observed by the US Geological Survey (USGS). This data is available under the MOPEX project [34]. The second condition for choosing a catchment was sufficient length of records.

The following catchments were chosen for modelling: Chehalis River, East Fork White River (EFW), Leaf River, Linville River and Smith River. A training dataset of 7,300 records of mean area precipitation (P) and stream flow discharge (Q) was created for each catchment. The validation datasets consist of the same variables, and the length is 12,606 records for each catchment. More precisely, the period 1st January 1948–26th December 1967 considered as a training period for all catchments (except EFW River) and the period 27th December 1967–1st July 2002 as a validation period. The EFW River catchment training and validation datasets consist of data in range 1st April 1948–26th March 1968 and 27th March 1968–30th September 2002, respectively. The time-step of the datasets is one day. The P and Q values in the datasets are derived from direct measurements. A brief description of the catchments is presented in Table 1. The training and validation sets were chosen from original datasets so that no gaps were included in whole range of training and validation time series.

The basic statistical characteristics of the P and Q series in the training and validation datasets are presented in Tables 2 and 3 respectively.

5 Methods

This paper introduces an original approach to rainfall-runoff modelling— a combination of simple hydrological modelling concepts in a genetic programming framework. The hydrological concepts included in the GP frame act as more or less complex functions with a common time shift element. A hybrid modelling approach combining GP and complex functions with a time shift (GPF) is proposed and tested. An improvement in the efficiency of rainfall-runoff modelling is expected with the use of this approach.

The special functions of GPF are simple reservoir function (RES), simple moving average (SMA), cumulative sum (CSUM) and delay operator (DLY). A precise description of these functions is presented in Sect. 5.1. These functions are suitable for rainfall-runoff modelling (and for time-series modelling in general) because memory of the process is to some extent included in these functions. This is advantageous in time-series modelling, where continual series are used, but it prevents changes in series ordering and therefore also the use of some techniques for improving the learning capability of the GP process (e.g. data sampling). In addition, the use of the proposed functions with a time shift does not require the model inputs lag to be determined. More precisely, the lag determination process is an object of the GP optimisation process.

The GPF approach also has some disadvantages. The use of a more complex function is more time consuming, and the choice of a function set is even more problem-dependent. Due to the complex time shift functions, a GPF-derived model is less straightforward to interpret than models derived via standard GP.

The main goals of this study are as follows. The first goal is to develop a GP tool suitable for combining with the functions mentioned here (a tool that allows easy implementation of users’ functions). The second goal is to test the efficiency of the GPF approach.

A new genetic programming tool was developed to meet the goals of this study. The name of the tool is SORD! (Solve OR Die!), and it is written mainly in R programming language [35] (the time shift functions are written in FORTRAN 90). A brief description of the SORD! tool is given in Sect. 5.1.

The efficiency of GP with the time-shift functions approach (GPF) is tested against GP with a standard function set (denoted as GP) and against an artificial neural network model (ANN). ANN is widely used as a reference method in rainfall runoff modelling, and accordingly it was also chosen for comparative purposes in our study.

Two rainfall-runoff modelling cases are tested. The modelling case with rainfall inputs, and the case with the rainfall value and the antecedent runoff value as input variables. All data records are in a one-day step, and the forecast is for one day ahead.

The tested methods were compared in sample results of 100 independent runs for each method. The performance of the models is evaluated using three criteria that evaluate the accuracy and the variation of the simulated runoff values. The following criteria were chosen: mean error, mean square error and Nash–Sutcliffe efficiency coefficient NS [36], which is widely used in rainfall-runoff modelling. NS is defined in Table 5.

5.1 Description of the SORD! program

The SORD! GP tool was developed for modelling purposes. The SORD! program is written in R programming language. More precisely the program and the standard functions are written in R, while the special functions of the GPF approach are written in FORTRAN 90 and are used as a library in SORD! These functions are included in the SORD! function set and are described below.

The standard syntax tree GP method is implemented in SORD! The syntax trees are linearised in the form of an array. This array represents an individual, and all GP operators can be applied to representations of this form. The general form of individual (\(\mathbf I \)) in a linearised syntax tree array is given in Eq. (1).

where \(F \vee T\) means that a function or a terminal can be in this position (\(\vee \) counts as logical disjunction), \(in_{a}\) is an array row pointer (pointing to the row in which the function argument is), \(a\) is order of argument (\(a~= 1, 2,\ldots , A_{F}\)), \(A_{F}\) is arity of the function and \(max(A_{F})\) is the maximum value of the used functions—on which the number of columns in the array depends. The empty position is denoted by _ symbol.

The evaluation of the presented types of individual is processed as a symbolic expression in R after the array has been parsed.

The main GP options of SORD! are the number of individuals in the population, the number of generations, the number of program runs (for samples), the fitness metric, the maximal tree depth in the initial population and in evolution, probabilities of variation operators, selection method, function and terminal set.

The variation operators available in SORD! are subtree crossover and three types of mutation: subtree mutation, separation and mutation of a constant.

The tournament, double tournament [37] and controlled double tournament selection methods are implemented. Elitism is also available.

The available functions and terminals of SORD! are presented in Table 4. The functions upper indexed with p in this table refer to protected functions. Protection is needed to avoid program fall during GP computation as a consequence of inconvenient input entering the function.

The time shift function DLY is a simple function with two arguments. The first argument is the vector of the input variable (which may be changed by operations of previous functions), and the second argument is the lag value. DLY returns the vector of the lagged variable. The values of the lags may be from one to the length of the input vector.

The SMA function is the simple moving average, and the CSUM function is the cumulative sum. The input to these functions is the vector of the variable and the value of the length of the moving window. The SMA function returns the vector of the averaged values by the moving window. The length of the moving window is bounded to values from one to the value corresponding to the length of the input vector. The CSUM function returns the cumulative sum of the values in the moving window. The boundaries are the same as for SMA.

The RES function represents a reservoir model. This model is described by Eqs. (2) and (3).

In these equations \(O\) is the outflow from the reservoir, \(S\) is the storage in the reservoir, \(x\) is the input variable, \(R\) is the reservoir parameter, \(i\) is the time step.

The inputs to RES are the vector of the variable and the reservoir parameter. The outflow from the virtual reservoir is the return of this function. Values of \(R\) equal to zero are changed to \(1\times 10^{-9}, S\) is positive or zero.

The DLY, SMA, CSUM and RES functions work only if the first argument is a vector, otherwise the functions returns the first argument unchanged. The second argument to these functions is an absolute value (all functions), and counts as an integer (not in RES) or a vector of integers (the lag, moving window or reservoir parameter may be a vector, but with same length as the first argument). The evaluation of functions DLY, SMA and CSUM at the beginnings of the input series (i.e. the lag or moving window can be longer than the known vector) is progressive. This means that a shorter input vector is used than the moving window parameter value determines (in the case of SMA and CSUM), or the last known record is used beside the lag parameter value (DLY).

The fitness functions implemented in SORD! are shown in Table 5. In this table \(y_{i}\) is reference data, \(\widehat{y}_{i}\) is modelled data, \(\overline{y}\) is the mean value of the ref. data and \(n\) is number of data items. The Nash–Sutcliffe efficiency coefficient NS and persistence index PI [38] are not used in their standard form, but in an adjusted form which allows optimisation with zero as the optimal solution.

Besides the main properties mentioned above, SORD! provides some weighting schemes to balance the input data. There are three static techniques based on the k-nearest neighbours method, and one based on nonlinearities in the data. The static methods are an implementation of the techniques described in [39]. Dynamic weighting is available through the stepwise adaptation technique proposed in [40].

Two methods for preventing bloat are implemented in SORD!—dynamic maximum tree depth, as described in [41], and the double tournaments mentioned above.

Outputs from SORD! are available in text and pdf form (modelled data series, fitness progress, histograms of fitness in samples, expression of the model, program depth progress).

The average computation time cost is approximately 4 min for GP and 7 min for GPF per one run on 1,000 rows of data, with the settings shown in Table 6. Time cost tests were performed on one core of Intel(R) Core(TM)2 Duo CPU P8700—2.53 GHz, OS—Linux 3.2.0-2-amd64.

The SORD! program is an open source project released under GNU license version 3, and is available for GNU/Linux operating systems. The code can be obtained at [42].

5.2 Setup of the models

Two modelling cases based on the input variables were studied in order to test the performance of GPF in one-day forecasting. In the first modelling case (referred to as P–Q) the inputs consist of precipitation only. In the second case (PQ–Q), the inputs consist of precipitation and antecedent runoff.

GPF works with time shift functions. In order to keep the same conditions for all tested methods it was necessary to identify an appropriate history of precipitation which is to be taken as a input vector for GP and for the ANN model. Appropriate temporal lags of the P inputs were identified as \(lag = max(lag{_{CC}},lag{_{MI}}) + 1\), where \(lag{_{CC}}\) is the lag identified by cross correlation, and \(lag{_{MI}}\) is the lag identified by average mutual information. Using one of these methods to identify inputs is a standard approach in rainfall-runoff modelling, so combining the methods provides a good estimation of important inputs on various catchments. For PQ–Q, it is not necessary to identify the runoff temporal lag, because the first known runoff value is most related to the forecast value.

Using the above formula, the following lags were determined for the training datasets. EFW River and Chehalis River 3 lags, Leaf River 6 lags, Smith and Linville River 2 lags.

On the basis of what was stated above, the two modelling cases for runoff forecasting are defined as follows: The P–Q case is defined as a function \(Q_{t+1} = f(P_{t}, P_{t-1},\ldots , P_{t-lag})\) for the GP and the ANN model. For GPF, the function is more precisely written \(Q_{t+1} = f(P_{t}, P_{t-x1},\ldots , P_{t-xn})\), where \(Q_{t+1}\) is the modelled runoff, \(P_{t-lag}\) is the input lagged precipitation, \(P_{t-xn}\) is the input precipitation at lag \(xn\) which is a subject of GPF optimisation. For the GPF model, the input vector therefore may or may not consist of lagged input values in dependency on the needs of functions with a time shift.

Similarly the PQ–Q case for GP and for the ANN model is defined as \(Q_{t+1} = f(Q_{t}, P_{t}, P_{t-1},\ldots , P_{t-lag})\) and for GPF \(Q_{t+1} = f(Q_{t}, P_{t}, P_{t-x1},\ldots , P_{t-xn}))\), where \(Q_{t}\) is last known runoff value. Since \(Q_{t+1}\) is to a large extent determined by \(Q_{t}\) (due to the large autocorrelation of runoff), for simplicity we did not consider longer lags than one. The GP and GPF model settings are given in Table 6.

The multilayer feed-forward back-propagation ANN model was used as a second comparison technique. The architecture of the net was follows: the input layer with the number of neurons depended on the length of the input vector determined by the lags, two hidden layers with five and three neurons, respectively, and one output neuron. The neuron transfer function was a hyperbolic tangent. A typical back propagation learning algorithm was used. The number of epochs for learning was 500.

All model computations were performed in 100 independent runs. The statistical properties of these 100 runs of each model were compared. The box plots of the validation performance in the mean of the NS criterion were analysed as the main comparison. If there was no obvious difference between the compared results, the two-sample Wilcoxon–Mann–Whitney test of the medians of the samples was performed (at the 95 % confidence level). In addition, performance measures of the models, the scatter plots of the results for the best model, and fitness progress charts are presented and commented. The forecast performance is evaluated using three criteria—mean error (ME), MAE and NS (MAE and NS are described in Table 5, mean error is defined as \( ME = {\frac{1}{n}\sum ^{n}_{i=1} (y_{i} - \widehat{y_{i}}})\)).

6 Results and discussion

The results for the P–Q and PQ–Q modelling cases are presented separately. The results are shown for all five catchments. The best model of ANN, GP and GPF from all samples for the P–Q case is introduced in scatter plots (Fig. 1) and the values of the criteria chosen for both modelling cases are given in Tables 7 and 8. The results for the samples are in box plot form (Figs. 3, 4). The best model from the samples is chosen on the basis of the NS value in training, but all presented values of the criteria come from validation of the models. The progress of the fitness convergence in GP and GPF over the generations in the samples is shown in Figs. 5 and 6.

The criteria for the best model in the P–Q modelling case are shown in Table 7 and for PQ–Q case in Table 8. In the P–Q case the values of all criteria outperform for GPF on all catchments. Only ME for GP on Leaf River is lower than for GPF, but the MAE is higher for GP than for GPF, which indicates that the GP simulated values are slightly more variable than the GPF simulated values.

The ME values show slight tendencies toward overestimation of GPF, and the MAE values show that the GPF results are generally less variable.

In the PQ–Q case, the values of the criteria of the tested models are rather similar. GPF is more accurate than the ANN model, in the sense of NS, on the EFW, Leaf and Smith River catchments. GPF is better than GPF and GP only on EFW and Leaf River. The ME values show that GPF underestimates on more catchments than the reference models. However, there are generally no major differences between all the criteria for the tested models for a given catchment.

The scatter plots of the best model in P–Q modelling are shown in Fig. 1. The scatter plots for models with PQ–Q inputs are not presented, due to small variability in the results (as mentioned above in the comments to Table 8). This leads to very similar shapes of the scatter plots.

Observed runoff values are on the X-axis of the scatter plot, and the simulated runoff values are given on the Y-axis. The charts in the figures confirm the results of the criteria table, but the underestimation of large runoff values on three catchments, Chehalis River, Smith River and Linville River, is evident in the P–Q scatter plots. This effect is model approach independent. However, the correspondence between measured and simulated runoffs is closest in GPF and is less close in GP and ANN for all catchments except the Linville River, where all models fail to explain the rainfall-runoff relations.

The examples of observed and simulated time series of runoff are shown in Fig. 2. These graphs show the simulation results of the best model from the samples for all catchments and methods (P–Q case). The runoff observed and simulated data for year 2001 (the last complete year of each validation series) are presented. The black points are the observed values, solid grey lines are simulated values. Although this is only short section of validation datasets, there can be seen that ANN model results are slightly overestimated, whereas GP and GPF simulated values seems to be underestimated. Generally this trend is more apparent in the scatter plots (especially for Chehalis and Smith R. catchments).

Box plots of the P–Q samples are shown in Fig. 3, and box plots of the PQ–Q samples in Fig. 4. The standard box plots as defined e.g. in [43], indicate the distribution of the NS value in 100 runs of ANN, GP and GPF. The outliers are not drawn.

The charts for the P–Q case shows the better efficiency of GPF on the samples for all tested catchments. Simple GP also outperformed ANN.

There is a different situation for PQ–Q. In this case, the results are balanced and the better model is decided on the basis of the Wilcoxon test. An alternative hypothesis is that the true location shift is less than 0. The p values are shown in Table 9. At the 95 % confidence level, GPF is better than ANN on three catchments and better than GP on four catchments. ANN has lowest variability, whereas the GP and GPF box vary quite widely. This can be due to the static model structure of ANN, while the GP model and the GPF model are assembled dynamically, so the derived models in the sample cover a wide range of simulated values.

The box plot results show that GPF is significantly better when there is a lack of information to explain the process, i.e. the runoff is explained only from the rainfall history. When information about the antecedent runoff is provided for the models, the efficiency of all approaches is rather similar. The variability in the samples is smallest for ANN and larger for GP and for GPF.

The progress of fitness in the P–Q and PQ–Q modelling samples is shown in Figs. 5 and 6, respectively. In these figures, the solid line indicates the sample median, the dashed line indicates the minimum value, the dotted line shows maximum fitness value (note that the criterion is NS0 – the smaller the better). Black colour is used for GPF fitness values, and grey colour for GP fitness values.

For both modelling cases there is a significant improvement in the progress of fitness on the Chehalis River and Leaf River catchments. The improvement for P–Q is already significant from the initial generations. On the other catchments, the fitness values of the GPF models remain better, but the differences are less significant.

What is more interesting, however, is the convergence speed. The speed improves significantly on the EFW and Leaf River catchments for P–Q, and on the Chehalis River and Leaf River catchments for PQ–Q.

The convergence of the fitness of the model is positively influenced in the GPF approach. This is probably due to the nature of the special functions, which are more complex, are problem oriented, and allow the self optimised history of the process to be used. Memory of the process is a crucial element in rainfall-runoff modelling. In addition, more complex functions are probably dominant functions in the optimisation due to their influence on modelling efficiency and therefore their influence on the fitness of individuals. The survival of individuals with these functions is higher, so the function can be optimised precisely over a longer period.

Analyses of GPF derived models (the best models per catchment and per modelling case) show that lag determination by GP optimisation provides lag values that are similar to or higher than when statistical methods are used. Using GPF on the Leaf River input, the lag values optimised for use in special functions were same as the values determined for the GP and ANN methods. On Smith River, Linville River and Chehalis River, the lag values were slightly higher, and on EFW River the lag value was lower.

7 Conclusion

This paper has introduced a combination of GP and conceptual models. The performance of GPF has been tested in modelling runoff one-day forecasts with various inputs. The reference models were simple GP and ANN.

The results presented here show that a combination of GP and conceptual models is a viable approach in rainfall-runoff modelling. When rainfall is the only input, GPF was the most accurate modelling technique, and it outperformed the reference modelling methods, ANN and GP. Nevertheless only four catchments were used in this study and more work is needed, to verify that GPF works generally better then reference methods.

When previous runoffs are included in the model inputs, the performance of the tested modelling approaches is quite similar.

It can be concluded that GPF has been able to model the rainfall-runoff process more precisely than reference methods, when there is less information in the inputs. If the inputs contain more information, the benefit of GPF is uncertain. More tests on more catchments are needed for a decision on the performance of GPF for PQ–Q modelling case.

However, the GPF approach benefits the estimation of the input history through the optimisation itself, and leads to the fastest convergence of optimisation.

Further work will be done on testing GPF on more catchments, especially catchments in the Czech Republic, where the danger of floods is higher. We will also focus on data balancing as a possible technique for improving the performance of the models.

References

Poli R, Langdon WB, McPhee NF (2008) A field guide to genetic programming. Published via http://lulu.com and freely available at http://www.gp-field-guide.org.uk (with contributions by Koza JR)

Koza JR (1992) Genetic Programming: on the programming of computers by means of natural selection. MIT Press, USA

Eiben AE, Smith JE (2003) Introduction to evolutionary computing. Springer, Berlin, p 320

Langdon WB, Poli R (2002) Foundations of genetic programming. Springer, Heidelberg

Bäck T, Fogel D, Michalewicz Z (2000) Evolutionary computation 1: basic algorithms and operators. Institute of Physics Publishing, Bristol

Babovic V (1996) Emergence, evolution, intelligence: hydroinformatics. PhD thesis, International Institute for Infrastructural, Hydraulic and Environmental Engineering and Technical University Delft, The Netherlands (Published by A. A. Balkema Publishers)

Babovic V, Abbott MB (1997) The evolution of equations from hydraulic data part i: theory. J Hydraul Res 35(3):397–430

Babovic V, Abbott MB (1997) Evolution of equations from hydraulic data. part ii: applications. J Hydraul Res 35(3):411–430

Cousin N, Savic DA (1997) A rainfall-runoff model using genetic programming. Technical report (report no. 97/03), School of Engineering, University of Exeter

Drecourt JP (1999) Application of neural networks and genetic programming to rainfall runoff modelling. Techical report, Danish Hydraulic Institute (D2K–0699-1)

Savic DA, Walters GA, Davidson JW (1999) A genetic programming approach to rainfall-runoff modelling. Water Resour Manag 13(3):219–231

Babovic V, Keijzer M (2002) Rainfall-runoff modelling based on genetic programming. Nordic Hydrol 33(5):331–346

Liong SY, Gautam RR, Khu ST, Babovic V, Keijzer M, Muttil N (2002) Genetic programming: a new paradigm in rainfall runoff modeling. J Am Water Resour Assoc 38(3):705–718

Jayawardena A, Muttil N, Fernando T (2005) Rainfall-runoff modelling using genetic programming. In: Zerger A, Argent R (eds) Proceedings of the MODSIM 2005 international congress on modelling and simulation: advances and applications for management and decision making. Melbourne, Australia, pp 1841–1847

Jayawardena AW, Muttil N, Lee JHW (2006) Comparative analysis of data-driven and gis-based conceptual rainfall-runoff model. J Hydrol Eng 11(1):1–11

Rabuñal JR, Puertas J, Suarez J, Rivero D (2007) Determination of the unit hydrograph of a typical urban basin using genetic programming and artificial neural networks. Hydrol Process 21(4):476–485

Makkeasorn A, Chang N, Zhou X (2008) Short-term streamflow forecasting with global climate change implications—a comparative study between genetic programming and neural network models. J Hydrol 352(3–4):336–354

Londhe S, Charhate S, Londhe S, Charhate S (2009) Towards modelling of streamflow using soft tools, vol 331. IAHS-AISH Publications, India, pp 245–253

Wang WC, Chau KW, Cheng CT, Qiu L (2009a) A comparison of performance of several artificial intelligence methods for forecasting monthly discharge time series. J Hydrol 374(3–4):294–306

Watanabe N, Fukami K, Imamura H, Sonoda K, Yamane S (2009) Flood forecasting technology with radar-derived rainfall data using genetic programming. In: Proceedings of the 2009 international joint conference on Neural Networks, IJCNN’09, IEEE Press, Piscataway, NJ, USA, pp 776–783

Ni Q, Wang L, Ye R, Yang F, Sivakumar M (2010) Evolutionary modeling for streamflow forecasting with minimal datasets: a case study in the West Malian river, China. Environ Eng Sci 27(5):377–385

Kashid S, Ghosh S, Maity R (2010) Streamflow prediction using multi-site rainfall obtained from hydroclimatic teleconnection. J Hydrol 395(1–2):23–38

Elshorbagy A, Corzo G, Srinivasulu S, Solomatine D (2010) Experimental investigation of the predictive capabilities of data driven modeling techniques in hydrology—part 1: concepts and methodology. Hydrol Earth Syst Sci 14(10):1931–1941

Elshorbagy A, Corzo G, Srinivasulu S, Solomatine D (2010) Experimental investigation of the predictive capabilities of data driven modeling techniques in hydrology—part 2: application. Hydrol Earth Syst Sci 14(10):1943–1961

Londhe S, Charhate S (2010) Comparison of data-driven modelling techniques for river flow forecasting. Hydrol Sci J 55(7):1163–1174

Maheswaran R, Khosa R (2011) Multi resolution genetic programming approach for stream flow forecasting. Lecture notes in computer science 7076 LNCS (part 1), pp 714–722

Rabuñal JR, Puertas J, Rivero D, Fraga I, Cea L, Garrido M (2011) Genetic programming for prediction of water flow and transport of solids in a basin. In: Proceedings of the 4th international conference on interplay between natural and artificial computation: new challenges on bioinspired applications, vol part II, IWINAC’11, Springer, Berlin, Heidelberg, pp 223–232

Whigham PA, Crapper PF (2001) Modelling rainfall-runoff using genetic programming. Math Comput Modell 33(6–7):707–721

Aytek A, Asce M, Alp M (2008) An application of artificial intelligence for rainfall-runoff modeling. J Earth Syst Sci 117(2):145–155

Guven A (2009) Linear genetic programming for time-series modelling of daily flow rate. J Earth Syst Sci 118(2):137–146

Fernando A, Shamseldin A, Abrahart R (2011) Comparison of two data-driven approaches for daily river flow forecasting. In: Proceedings of the MODSIM2011, 19th international congress on modelling and simulation, pp 1077–1083

Seckin N, Guven A (2012) Estimation of peak flood discharges at ungauged sites across Turkey. Water Resour Manag 26:2569–2581

Wang WC, Xu D, Qiu L, Ma J (2009b) Genetic programming for modelling long-term hydrological time series. Int Conf Nat Comput 4:265–269

Schaake J, Cong S, Duan Q (2006) US MOPEX data set. IAHS Publ Ser 307:9–28

R Development Core Team (2011) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. http://www.R-project.org, ISBN: 3-900051-07-0

Nash J, Sutcliffe J (1970) River flow forecasting through conceptual models part i—a discussion of principles. J Hydrol 10(3):282–290

Luke S, Panait L (2002) Fighting bloat with nonparametric parsimony pressure. In: Proceedings of the 7th international conference on parallel problem solving from nature, PPSN VII, Granada, Spain, 7–11 Sept 2002, Springer, Berlin, pp 411–421

Kitanidis P, Bras R (1980) Real-time forecasting wih a conceptual hydrologic model. 2. Applications and results. Water Resour Res 16(6):1034–1044

Vladislavleva E, Smits G, den Hertog D (2010) On the importance of data balancing for symbolic regression. IEEE Trans Evolut Comput 14(2):252–277

Eggermont J, Hemert JIv (2001) Adaptive genetic programming applied to new and existing simple regression problems. In: Proceedings of the 4th European conference on genetic programming, EuroGP’01, Springer, Berlin, pp 23–35

Silva S, Almeida J (2003) Dynamic maximum tree depth-a simple technique for avoiding bloat in tree-based GP. In: Cantú-Paz E, Foster J, Deb K, Davis L, Roy R, O’Reilly UM, Beyer HG, Standish R, Kendall G, Wilson S, Harman M, Wegener J, Dasgupta D, Potter M, Schultz A, Dowsland K, Jonoska N, Miller J (eds) Genetic and evolutionary computation—GECCO 2003, lecture notes in computer science, vol 2724, Springer, Berlin/Heidelberg, pp 210–210

Havlíček V (2011) SORD!—GP tool for hydrological modelling. http://www.kvhem.cz/vyzkum/software/

Tukey JW (1977) Exploratory data analysis. Addison-Wesley, Reading, p 688

Acknowledgments

Financial support from the Technology Agency of the Czech Republic (research project TA02021249) is gratefully acknowledged. The authors wish to acknowledge the MOPEX project staff, which are associated with data providing and management.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Havlíček, V., Hanel, M., Máca, P. et al. Incorporating basic hydrological concepts into genetic programming for rainfall-runoff forecasting. Computing 95 (Suppl 1), 363–380 (2013). https://doi.org/10.1007/s00607-013-0298-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00607-013-0298-0